Haar Cascade is a machine learning object detection algorithm used to identify objects in an image or video. It is based on the feature detection concept proposed by Paul Viola and Michael Jones in their original paper Rapid Object Detection using a Boosted Cascade of Simple Features (2001). A cascade function is trained from many positive and negative images, and the cascade is then used to detect objects in other images.

In redCV, the implementation does not include the training part of the algorithm. The cascade classifier is a simplified implementation that uses pre-trained parameters. redCV cascade files are similar to OpenCV XML files, but are plain text files for faster access.

In the original Viola-Jones cascade (stump-based cascade), each tree is composed of at most 3 nodes. Nodes have no leaves, and the left and right values are used for the decision. Features can be tilted. In the improved version (Rainer Lienhart and Jochen Maydt, tree-based cascade), nodes can have left and right leaves. If the calculated value is less than the node threshold, evaluation moves on to the next left or right node.

redCV uses 23 parameters per filter and supports both stump-based and tree-based cascades. Tilted features are also supported. All information is inside the classifier text file:

[Header] Section

How many stages are in the cascaded filter?

The second line of the file gives the number of stages.

How many filters are in each stage?

These counts are listed starting from the third line.

Filters are then defined in the [Nodes] section.

First line: training window size.

Then each stage of the cascaded filter has:

23 parameters per filter + 1 threshold parameter per stage

The 23 parameters for each filter are:

1 to 4: coordinates of rectangle 1

5: weight of rectangle 1

6 to 9: coordinates of rectangle 2

10: weight of rectangle 2

11 to 14: coordinates of rectangle 3 (default 0)

15: weight of rectangle 3 (default 0.0)

16: tilted flag

17: threshold of the filter

18: alpha 1 of the filter ; left node value

19: alpha 2 of the filter ; right node value

20: has left node?

21: left node value; 0, 1 or 2

22: has right node?

23: right node value; 0, 1 or 2
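The 23-value layout listed above can be unpacked into a small record. The following is only a minimal sketch: the names `Rect`, `HaarFilter` and `parse_filter` are hypothetical and are not part of redCV, and the order of the four rectangle coordinates is kept exactly as stored, since the list does not say how they are ordered.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Rect:
    coords: Tuple[int, ...]  # the four stored coordinates, in file order
    weight: float


@dataclass
class HaarFilter:
    rects: List[Rect]   # always three entries; an unused third one is all zeros
    tilted: bool        # parameter 16
    threshold: float    # parameter 17
    alpha1: float       # parameter 18, left node value
    alpha2: float       # parameter 19, right node value
    has_left: bool      # parameter 20
    left_node: int      # parameter 21
    has_right: bool     # parameter 22
    right_node: int     # parameter 23


def parse_filter(values: Sequence[float]) -> HaarFilter:
    """Map one group of 23 numbers onto named fields (hypothetical helper)."""
    if len(values) != 23:
        raise ValueError("expected 23 parameters, got %d" % len(values))
    rects = []
    for k in range(3):
        base = k * 5  # each rectangle uses 4 coordinates + 1 weight
        coords = tuple(int(v) for v in values[base:base + 4])
        rects.append(Rect(coords, float(values[base + 4])))
    return HaarFilter(
        rects=rects,
        tilted=bool(values[15]),
        threshold=float(values[16]),
        alpha1=float(values[17]),
        alpha2=float(values[18]),
        has_left=bool(values[19]),
        left_node=int(values[20]),
        has_right=bool(values[21]),
        right_node=int(values[22]),
    )
```

Parameter numbers in the list are 1-based, so parameter 16 (the tilted flag) is read from index 15, and so on.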

Part of the redCV code is based on a C code sample developed by Francesco Comaschi (http://www.es.ele.tue.nl/video/). redCV object detection mixes Red/System and Red, since structures, pointers and large arrays are needed for robust and fast execution. The code works well for detecting faces in images and videos. redCV uses several techniques:

Image pyramid

This is a multi-scale representation of an image that makes object detection scale-invariant, so that large and small objects can be detected with the same detection window. In the redCV code, the image pyramid is implemented by down-sampling the image with a nearest-neighbor algorithm.
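As an illustration of the pyramid idea, the sketch below lists the image sizes visited when the image is repeatedly shrunk until it no longer contains the detection window. The function name `pyramid_sizes` and the default scale factor of 1.2 are assumptions chosen for the example, not values taken from redCV.

```python
def pyramid_sizes(img_w, img_h, win_w, win_h, scale_factor=1.2):
    """Return the (width, height) of each pyramid level, largest first.

    scale_factor is an assumed parameter: each level is the original
    image divided by a factor that grows geometrically.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    factor = 1.0
    while True:
        w = int(img_w / factor)
        h = int(img_h / factor)
        # Stop once the detection window no longer fits in the level.
        if w < win_w or h < win_h:
            break
        sizes.append((w, h))
        factor *= scale_factor
    return sizes
```

A fixed-size window run over the smaller levels covers proportionally larger regions of the original image, which is how large objects end up being detected.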

The rcvNearestNeighbor routine implements both up- and down-sampling. Nearest neighbor is the simplest and fastest image scaling technique, which makes it very useful when speed is the main concern. The principle of image scaling is to use a source image as the base for constructing a new, scaled image. The destination image will be smaller, larger, or equal in size depending on the scaling ratio. When enlarging an image, empty pixels are added to the original base picture. The up-sampling algorithm has to find an appropriate value for each of these empty pixels: each one is filled with the value of the nearest neighboring pixel. Down-sampling, on the other hand, involves a reduction of pixels. In this case, the scaling algorithm has to find the best pixels to throw away.

The information needed is the horizontal and vertical ratio between the original image and the scaled image, given by this equation.
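A common convention, assumed here rather than taken from the redCV source, is to define each ratio as the source size divided by the destination size and to read each destination pixel from the source position obtained by multiplying its coordinates by those ratios. The sketch below applies this to an image stored as a list of rows; `nearest_neighbor_resize` is a hypothetical name, not the rcvNearestNeighbor routine itself.

```python
def nearest_neighbor_resize(src, dst_w, dst_h):
    """Scale a 2-D image (list of rows) to dst_w x dst_h by nearest neighbor."""
    src_h = len(src)
    src_w = len(src[0])
    # Assumed convention: ratio = source size / destination size.
    x_ratio = src_w / dst_w
    y_ratio = src_h / dst_h
    dst = []
    for y in range(dst_h):
        # Clamp to stay inside the source in case of rounding at the border.
        sy = min(int(y * y_ratio), src_h - 1)
        row = []
        for x in range(dst_w):
            sx = min(int(x * x_ratio), src_w - 1)
            row.append(src[sy][sx])
        dst.append(row)
    return dst
```

The same function covers both directions: ratios below 1 duplicate source pixels (up-sampling), and ratios above 1 skip source pixels (down-sampling).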

Integral image and Haar features

As explained in the redCV manual, the integral image (or summed-area table) makes it possible to sum the pixel values within a rectangular region defined by only 4 points, which is very efficient when the pixel sums of many regions of interest within an image are needed. For a discrete image i, its integral image ii at the pixel (x, y) is defined as the sum of the pixel values of i above and to the left of (x, y), according to this equation:
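Written out from the definition in the sentence above, and following the inclusive convention of the Viola-Jones paper (the primed summation variables are notation introduced here), the integral image is:

$$
ii(x, y) = \sum_{x' \le x,\; y' \le y} i(x', y')
$$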

Given the integral image, the sum of all the pixels within a rectangle aligned with the image axes can be evaluated with only four array references, regardless of the size of the rectangle. A Haar feature, for its part, considers adjacent rectangular regions at a specific location in a detection window, sums up the pixel intensities in each region and calculates the difference between these sums.

In redCV, integral images are used together with Haar features to make this computation fast and efficient.
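The four-reference lookup and a simple two-rectangle feature can be sketched as follows. This is an illustrative version, not the redCV code: the function names are hypothetical, and the table is padded with one extra zero row and column (an implementation choice made here) so that rectangle sums need no border checks.

```python
def integral_image(img):
    """Build a padded summed-area table for a 2-D image (list of rows).

    ii[y + 1][x + 1] holds the sum of img over rows 0..y and columns 0..x.
    """
    h = len(img)
    w = len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a w x h region (w assumed even)."""
    half = w // 2
    left = rect_sum(ii, x, y, half, h)
    right = rect_sum(ii, x + half, y, half, h)
    return left - right
```

Whatever the rectangle size, `rect_sum` costs the same four array reads, which is what keeps the evaluation of thousands of features per window affordable.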

Sliding Window

The algorithm uses a sliding window that shifts across the whole image at each scale to detect objects, as shown in the next figure. In the redCV implementation, the sliding window shifts pixel by pixel by default, but this step can be increased for faster processing. Generally, the size of the sliding window corresponds to the size of the window used for training the cascade.
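The window scan can be sketched as a generator of window positions. The name `sliding_windows` and its `step` parameter are illustrative assumptions; a step of 1 corresponds to the pixel-by-pixel default described above.

```python
def sliding_windows(img_w, img_h, win_w, win_h, step=1):
    """Yield (x, y, win_w, win_h) for every window fully inside the image."""
    for y in range(0, img_h - win_h + 1, step):
        for x in range(0, img_w - win_w + 1, step):
            yield (x, y, win_w, win_h)
```

Doubling the step divides the number of windows by roughly four, at the cost of possibly missing an object that falls between two positions.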

Cascade classifier

Each time the window shifts, the image region within the window goes through the cascade classifier, which consists of several stages of filters. This is done stage by stage. Each stage of the classifier labels the region defined by the current location of the sliding window as either positive or negative according to the stage threshold value. A positive label indicates that an object may be present, and a negative label indicates that no object was found. If the label is negative, the classification of this region is complete: the cascade classifier immediately rejects the region, and the detector slides the window to the next location. If the label is positive, the classifier passes the region to the next stage. The detector reports an object at the current window location when the final stage classifies the region as positive. This means that a region that passes all stages is classified as a candidate, which may be refined by further post-processing.
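The stage-by-stage rejection can be sketched for a stump-based cascade as follows. This is a simplified illustration under stated assumptions: each filter contributes its left value (alpha 1) when the feature value is below the filter threshold and its right value (alpha 2) otherwise, a stage passes when the summed contributions reach the stage threshold, and the variance normalization used by real detectors is left out. The name `passes_cascade` and the tuple layout are hypothetical.

```python
def passes_cascade(stages, feature_values):
    """Return True if a window passes every stage, False at the first rejection.

    stages: list of (stumps, stage_threshold), where each stump is
            (feature_index, threshold, alpha1, alpha2).
    feature_values: precomputed Haar feature values for the current window.
    """
    for stumps, stage_threshold in stages:
        score = 0.0
        for index, threshold, alpha1, alpha2 in stumps:
            # Assumed convention: below threshold -> left value, else right.
            if feature_values[index] < threshold:
                score += alpha1
            else:
                score += alpha2
        if score < stage_threshold:
            return False  # early rejection: later stages are never evaluated
    return True
```

Because most windows in an image contain no object, most of them are discarded by the first few stages, so the later and larger stages run on only a small fraction of positions.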

Lastly, post-processing includes a distance-based clustering of rectangle candidates in order to select the best candidate.
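One simple form of distance-based clustering is sketched below. It is not the redCV routine: the greedy grouping by center distance, the `max_dist` parameter, and the choice to report the average rectangle of each group are all assumptions made for the example.

```python
def cluster_rectangles(rects, max_dist):
    """Group (x, y, w, h) rectangles whose centers are close, then average.

    A rectangle joins the first cluster whose mean center lies within
    max_dist of its own center; otherwise it starts a new cluster.
    """
    clusters = []
    for r in rects:
        cx = r[0] + r[2] / 2
        cy = r[1] + r[3] / 2
        for members in clusters:
            mx = sum(q[0] + q[2] / 2 for q in members) / len(members)
            my = sum(q[1] + q[3] / 2 for q in members) / len(members)
            if (cx - mx) ** 2 + (cy - my) ** 2 <= max_dist ** 2:
                members.append(r)
                break
        else:
            clusters.append([r])
    # One representative per cluster: the rounded mean of its rectangles.
    return [
        tuple(round(sum(q[i] for q in members) / len(members)) for i in range(4))
        for members in clusters
    ]
```

A true face usually triggers several overlapping candidates at neighboring positions and scales, so merging them yields one rectangle per face.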

The redCV implementation of object detection is optimized with Red/System structures and routines, with performance comparable to the C++ implementation in OpenCV.

faceDetection.red is a generic program for face detection in images. The code exposes many parameters for fine-tuning object detection (see the redCV manual for details). It was tested with many different images, and the results are good.

Let’s begin with a simple image with one face, which is identified in only 40 ms vs. 20 ms for C++ code with OpenCV.

The code can also identify the right eye, the left eye or both eyes, with or without glasses.

This new image is interesting since faces are large or small due to perspective, and some faces are slightly tilted or rotated. All faces are identified. This suggests that the image pyramid implemented in redCV works well.

The redCV implementation is also efficient for a large number of faces in a large image: only 1180 ms to identify 29 faces in a 1280x626 pixel image.

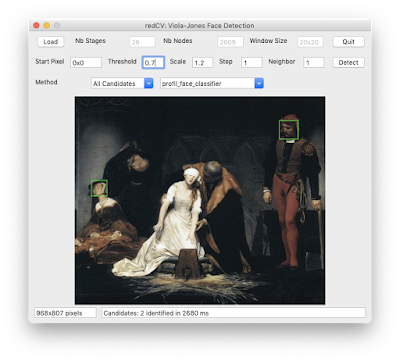

The last sample shows that the redCV Haar cascade classifier can also be used for complex scenes, as in this oil painting by Paul Delaroche. The task was rather difficult since the painting contains intensely dark areas, and Jane Grey’s face is masked. Most of the faces are tilted and rotated (e.g. the ladies and the lieutenant of the Tower of London).

All code for face recognition will be updated within a few days here: https://github.com/ldci/redCV

redCV is updated now.


© François Jouen & Red Foundation 2020